Best On-page SEO Techniques to Improve Website Search Rankings

Let’s not deny it: organizations today spend thousands of dollars on internet marketing to hold a prominent spot in search engine results. And of course, to stand out among millions of other websites, the SEO strategy has to be just as strong, right?

Still confused about SEO?

Let’s dig in, then.

What is SEO?

‘SEO, a.k.a. search engine optimization, is a way of making a website more credible to search engines.’

SEO is all about earning qualified visitors for better conversion rates and revenue. But it’s also a fact that it takes a lot to make users move beyond the first page of a website.

In this blog, we will look at some of the best on-page SEO methods that help websites gain better visibility and search rankings.

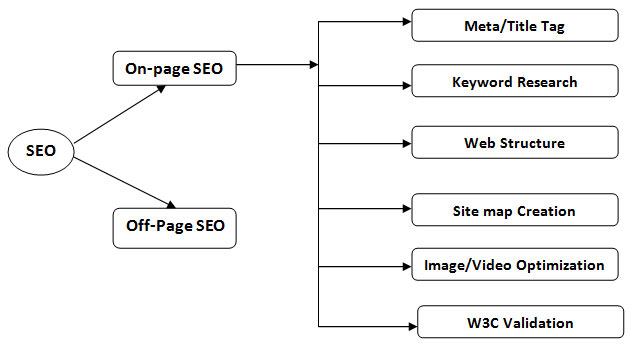

The Framework

Advanced On-Page SEO Techniques you should know

To speak colloquially, search engines are very particular about the quality of websites. If you need proof, just look up the number of algorithm updates Google has rolled out and, of course, the impact of its penalties.

A website should be credible both internally and externally. On-page SEO helps maintain the internal quality of a website.

On-page optimization focuses on elements within the website, as shown in the graphic above. I believe we are not new to these terms, so let’s move beyond page titles, meta descriptions, and header tags.

So, what should you look for to improve search engine rankings?

- Building Internal Link Structure

- ‘Content is king’, but not without links, be they internal or external. Yes, even good-quality content will not make it to the heart of the readers unless it is deeply informative, meaning deeply linked. We will speak about backlinks some other time; for now, let’s look into the internal link structure.

- Google’s search bots set out to crawl and index a website, and a proper internal link structure helps them do that. The site architecture is crucial for both search engines and users.

- Why are internal links essential?

- i. Users can easily navigate through the website.

- ii. Information is presented in a clearer hierarchy.

- iii. Link juice flows from one web page to another across the website.

- A better link structure reflects the quality of a website and keeps users on it longer. The longer they stay, the better the chances of them doing business with you.
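To make the idea of internal link structure concrete, here is a minimal sketch that treats a site as a graph of pages and links. It measures how many clicks each page sits from the home page and flags pages that no internal link reaches. The `SITE_LINKS` map, the URLs in it, and the function names are all made up for illustration; on a real site you would build the map from a crawl.

```python
from collections import deque

# Hypothetical site map: each page lists the internal pages it links to.
SITE_LINKS = {
    "/": ["/services/", "/blog/"],
    "/services/": ["/services/seo/", "/"],
    "/services/seo/": ["/blog/on-page-seo/"],
    "/blog/": ["/blog/on-page-seo/"],
    "/blog/on-page-seo/": ["/services/seo/"],
    "/old-landing-page/": ["/"],  # no other page links here
}


def click_depths(links, home="/"):
    """Breadth-first search from the home page: {page: clicks from home}."""
    depths = {home: 0}
    queue = deque([home])
    while queue:
        page = queue.popleft()
        for target in links.get(page, []):
            if target not in depths:
                depths[target] = depths[page] + 1
                queue.append(target)
    return depths


def orphan_pages(links, home="/"):
    """Pages in the map that cannot be reached by following links from home."""
    reachable = click_depths(links, home)
    return sorted(page for page in links if page not in reachable)


depths = click_depths(SITE_LINKS)
orphans = orphan_pages(SITE_LINKS)
```

Pages with a large click depth, or pages that show up as orphans, are the ones users and crawlers are least likely to find, so they are good candidates for new internal links.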

- Performing Latent Semantic Indexing (LSI)/ LSI Driven Keyword Research

- Keyword research, checking keyword density... well, let’s do something beyond the conventional research process.

- Basically, the term LSI looks overwhelming, but it’s not. The whole concept here is to identify LSI keywords (terms semantically related to your main keyword) and add them to your content. Doing so helps Google understand the page and return more relevant search results to users.

- Breaking down the words latent semantic indexing, we get:

- Latent – hidden; the underlying structure that is not visible on the surface

- Semantic – the meaning or interpretation of words and the relationships between words

- Indexing – adding crawled web pages to the Google Search (in terms of SEO)

- Let’s go with a more typical definition, shall we?

- What is latent semantic indexing?

- ‘Latent semantic indexing is a mathematical process of finding the relationships between terms and concepts. Correlated words are identified and considered as the keywords of the web page.’

- As said before, LSI helps determine the relationship between the terms and concepts in a piece of content. It checks whether the keywords used in the document are semantically close or semantically distant. Suppose the page title has a keyword; LSI looks for synonyms and related terms for that keyword and defines the relationship.

- Benefit?

- Pages that use semantically related keywords tend to be matched to more relevant queries, which can improve traffic and search rankings.
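To get a feel for what "semantically close" means, here is a rough sketch. Full LSI applies singular value decomposition to a term-document matrix; the code below is a simpler stand-in that skips that step and just compares how terms are distributed across documents using cosine similarity. The `DOCUMENTS` corpus and all function names are invented for illustration, and this is not how Google or any particular tool computes related keywords.

```python
import math
import re
from collections import Counter

# Hypothetical mini-corpus; in practice these would be your own pages.
DOCUMENTS = [
    "on-page seo improves search rankings through internal links",
    "internal links help search bots crawl and index a website",
    "keyword research finds terms that improve search rankings",
    "our office serves fresh coffee and tea every morning",
]


def tokenize(text):
    """Lowercase the text and split it into alphabetic words."""
    return re.findall(r"[a-z]+", text.lower())


def term_vectors(documents):
    """Map each term to a vector of its counts across the documents."""
    counts = [Counter(tokenize(doc)) for doc in documents]
    vocabulary = sorted(set().union(*counts))
    return {term: [c[term] for c in counts] for term in vocabulary}


def cosine(a, b):
    """Cosine similarity of two equal-length vectors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def related_terms(term, vectors, top_n=3):
    """Terms whose document distribution is most similar to the given term."""
    target = vectors[term]
    scored = [(other, cosine(target, vec))
              for other, vec in vectors.items() if other != term]
    return sorted(scored, key=lambda pair: (-pair[1], pair[0]))[:top_n]


vectors = term_vectors(DOCUMENTS)
```

Calling `related_terms("search", vectors)` ranks the terms that appear in the same documents as "search", while a term like "coffee", which never shares a document with it, scores zero.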

- Clearing the Root Folder

- It’s a common practice for webmasters to keep dumping files in the root folder. Such practices can hurt SEO, since a leaner root directory structure is easier for search engines to crawl.

- But how do these junk files pile up?

- They do not build up overnight; most of the time they come from:

- i. Backup Directories

- ii. Unused DOC/PDF Files & Temporary Files

- iii. Multiple File Versions

- iv. Trial Files

- Remember! A target page should not be more than two or three directory levels away from the root.

- Solution?

- Create a directory with a name like ‘old-files’. Once you move all the unused files to this directory, update the robots.txt file with this directive, placed under a User-agent line such as User-agent: *.

- Disallow: /old-files/
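As a quick sanity check, the sketch below uses Python’s standard `urllib.robotparser` to confirm that a rule like the one above blocks crawling of the ‘old-files’ directory, and counts how many directory levels a page sits below the root. The `ROBOTS_TXT` content, the example.com URLs, and the helper names are assumptions made for illustration.

```python
from urllib.parse import urlparse
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt content; the Disallow rule sits under a User-agent line.
ROBOTS_TXT = """\
User-agent: *
Disallow: /old-files/
"""


def directory_depth(url):
    """Number of directory levels between the site root and the page."""
    path = urlparse(url).path
    segments = [segment for segment in path.split("/") if segment]
    # Treat the last segment as the page itself unless the path ends in "/".
    if segments and not path.endswith("/"):
        segments = segments[:-1]
    return len(segments)


def is_crawlable(url, robots_txt=ROBOTS_TXT, user_agent="*"):
    """True if the given robots.txt rules allow this user agent to fetch the URL."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(user_agent, url)


blocked = is_crawlable("https://example.com/old-files/backup.zip")
allowed = is_crawlable("https://example.com/blog/on-page-seo/")
depth = directory_depth("https://example.com/blog/seo/on-page.html")
```

Here `blocked` comes out `False` and `allowed` comes out `True`, and `depth` is 2, which stays within the two-to-three-level guideline mentioned above.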

